Total Ownership Clarity.

Optimized from Day 1.

Deploy mainstream or custom LLMs locally with complete control. Full data sovereignty, zero external dependencies!

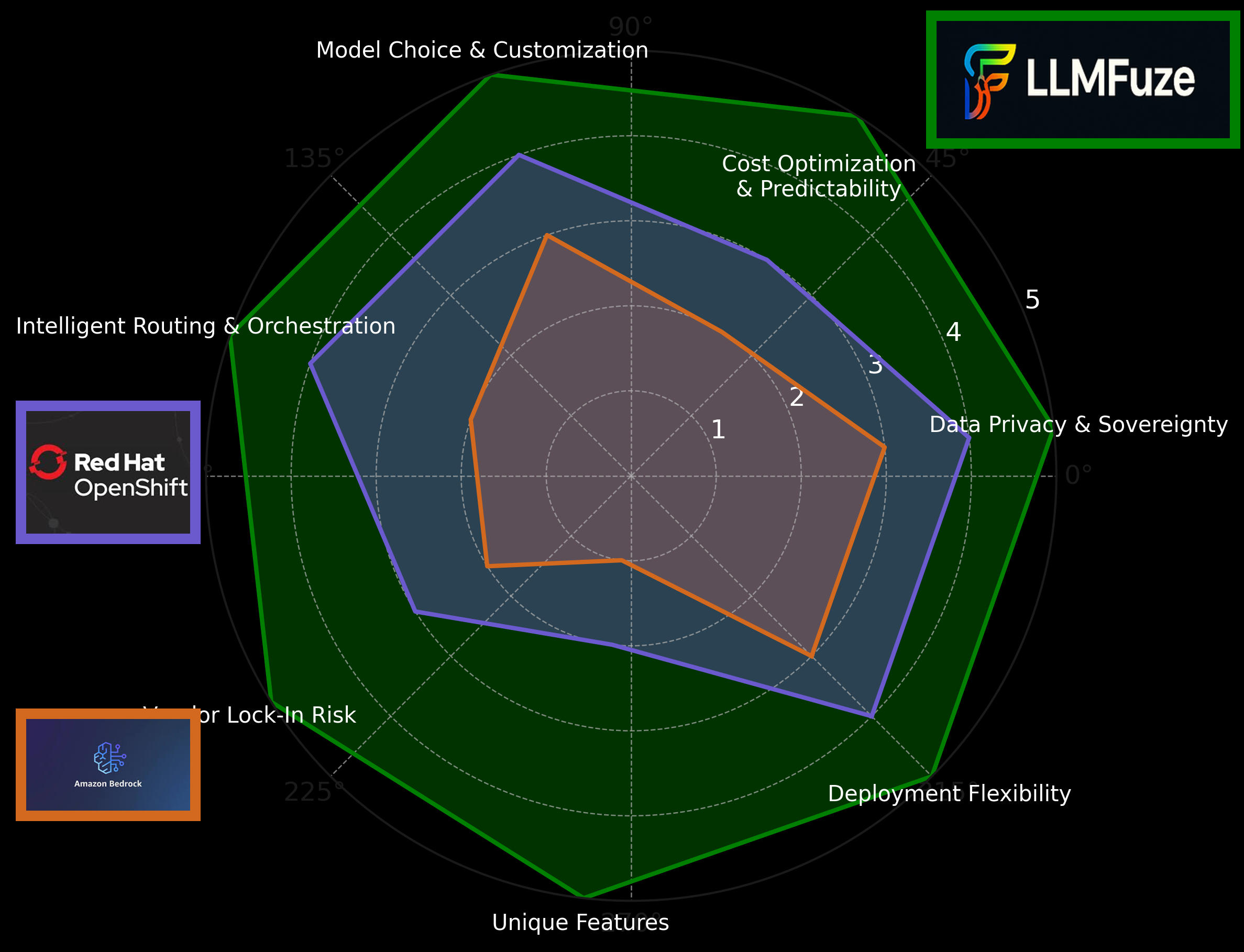

In a landscape dominated by walled gardens and complex managed services, LLMFuze empowers you with unparalleled control, transparency, and cost-efficiency. See how we stack up against common alternatives:

| Feature / Differentiator | LLMFuze | AWS Bedrock | Red Hat OpenShift AI |
|---|---|---|---|
| Intelligent Routing & Orchestration (RRLM) | 🟢 Adaptive, learning-based routing | 🟠 Basic routing; API gateway features | 🟠 Workflow orchestration (Kubeflow) |
| Deployment Flexibility | 🟢 Edge, Blend, Cloud; total control | 🟠 Primarily cloud (managed service) | 🟢 On-prem, hybrid, cloud (OpenShift) |
| True Data Privacy & Sovereignty | 🟢 Maximum with Edge and the TrulyPrivate™ Add-on | 🟠 Managed service; data policies apply | 🟠 Strong on-prem; cloud policy dependent |
| Cost Optimization & Predictability | 🟢 Superior ROI with Edge; RRLM | 🔴 Usage-based; complex to predict | 🟠 Platform subscription + resource usage |
| Model Choice & Customization | 🟢 BYOM, OSS, fine-tuning, private GPT-4 | 🟠 Curated foundation models; limited BYOM | 🟢 Supports various models; MLOps focus |
| Vendor Lock-In Risk | 🟢 Minimal; open standards | 🔴 Higher; deep AWS integration | 🟠 Moderate; tied to the OpenShift platform |
| TrulyPrivate™ GPT-4/Advanced Models | 🟢 Unique add-on for secure VPC hosting | 🔴 Not directly comparable; public APIs | 🔴 Not directly comparable |
| AI-Assisted Development Protocols | 🟢 DISRUPT Protocol: 95% success rate, enterprise-grade | 🔴 No structured development methodology | 🔴 No AI development workflow automation |
| Speed to Innovation | 🟢 Rapid with Cloud; strategic depth with AI workflows | 🟠 Fast for standard foundation models; customization slow | 🟠 Platform setup required; robust MLOps |

LLMFuze offers the freedom to innovate on your terms, with your data, under your control.

Whether you deploy Edge for full control, Blend for hybrid agility, or Cloud for rapid orchestration, LLMFuze ensures you know your numbers. No mystery costs, just the freedom to choose the right fit, backed by data.

Try our demo interface, powered by an LLMFuze Edge deployment. This live demo showcases our local AI capabilities with complete data sovereignty and zero external API dependencies. The available personas load dynamically based on the current deployment.

Running locally with full privacy and control

No data leaves your infrastructure

GPU-accelerated inference on-premises

Available personas: Senior Developer, Architect, Legal Team

Ready to take control of your AI strategy? LLMFuze offers flexible solutions tailored to your needs. Schedule a one-on-one meeting with us to find the right plan for you.